As we focus our work on empowering the agent design experience, earlier this week we published a list of new features, including the intent triage agent and the exact question-answering agent, which use Conversational Language Understanding (CLU) and Custom Question Answering (CQA). Today we’re excited to share new enhancements to the customization experiences in CLU and CQA, which are officially available now.

Customization in AI Foundry

We’re excited to announce that both Conversational Language Understanding (CLU) and Custom Question Answering (CQA) are now fully integrated into Azure AI Foundry, unlocking new customization capabilities for building intelligent agents. With the growing need for multimodal intelligence, you can now manage all of your Azure AI models in a single place, and AI developers and business stakeholders can fine-tune custom multilingual language models in one unified AI Foundry experience. From fine-tuning task setup to model deployment, language model customization is now more robust and more efficient than before.

Smart Intent Routing with CLU

The Intent Triage Agent is powered by CLU, which enables you to build custom natural language understanding models in over 100 languages to predict the overall intent of an utterance and extract important information from it quickly and accurately.
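To show what consuming a CLU prediction can look like in an agent, here is a minimal Python sketch. It assumes the request and response shapes of the CLU runtime REST API (`analyze-conversations`, api-version `2023-04-01`); verify the field names against the current API reference. The helper names, project and deployment names, the 0.6 threshold, and the sample response are hypothetical. Because prediction confidence scoring is described below as a feature of the machine learning option, the sketch applies the threshold only when a score is present.

```python
from dataclasses import dataclass, field
from typing import Optional

# Assumption: the body below is POSTed to
#   {endpoint}/language/:analyze-conversations?api-version=2023-04-01
# with an "Ocp-Apim-Subscription-Key" header. Check the current API reference.


def build_clu_request(text: str, project: str, deployment: str) -> dict:
    """Build the JSON body for a single-utterance CLU prediction request."""
    return {
        "kind": "Conversation",
        "analysisInput": {
            "conversationItem": {"id": "1", "participantId": "user", "text": text}
        },
        "parameters": {"projectName": project, "deploymentName": deployment},
    }


@dataclass
class Route:
    intent: str
    confidence: Optional[float]
    entities: dict = field(default_factory=dict)


def route_from_response(response: dict, threshold: float = 0.6,
                        fallback: str = "None") -> Route:
    """Route on the top intent of a CLU-style response (hypothetical helper).

    If the top intent has a confidenceScore below `threshold`, route to
    `fallback`; if no score is present, accept the top intent as-is.
    """
    prediction = response["result"]["prediction"]
    top = prediction["topIntent"]
    scores = {i["category"]: i.get("confidenceScore")
              for i in prediction.get("intents", [])}
    confidence = scores.get(top)
    # Group extracted entity text by entity category.
    entities = {}
    for entity in prediction.get("entities", []):
        entities.setdefault(entity["category"], []).append(entity["text"])
    if confidence is not None and confidence < threshold:
        return Route(fallback, confidence, entities)
    return Route(top, confidence, entities)


# Made-up response, for illustration only.
SAMPLE_RESPONSE = {
    "kind": "ConversationResult",
    "result": {
        "query": "I need to fly to Seattle on Friday",
        "prediction": {
            "projectKind": "Conversation",
            "topIntent": "bookFlight",
            "intents": [
                {"category": "bookFlight", "confidenceScore": 0.92},
                {"category": "check_order_status", "confidenceScore": 0.05},
            ],
            "entities": [
                {"category": "destination", "text": "Seattle",
                 "offset": 17, "length": 7, "confidenceScore": 1.0},
            ],
        },
    },
}

print(route_from_response(SAMPLE_RESPONSE))
# Route(intent='bookFlight', confidence=0.92, entities={'destination': ['Seattle']})
```

Routing low-confidence predictions to the built-in `None` intent is one design choice; an agent could instead ask a clarifying question or hand off to a human.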

We’re announcing new features to empower your agent-building experience:

- Deploy CLU Faster with Azure OpenAI (AOAI): CLU authoring now offers two options for designing and deploying your custom CLU model:

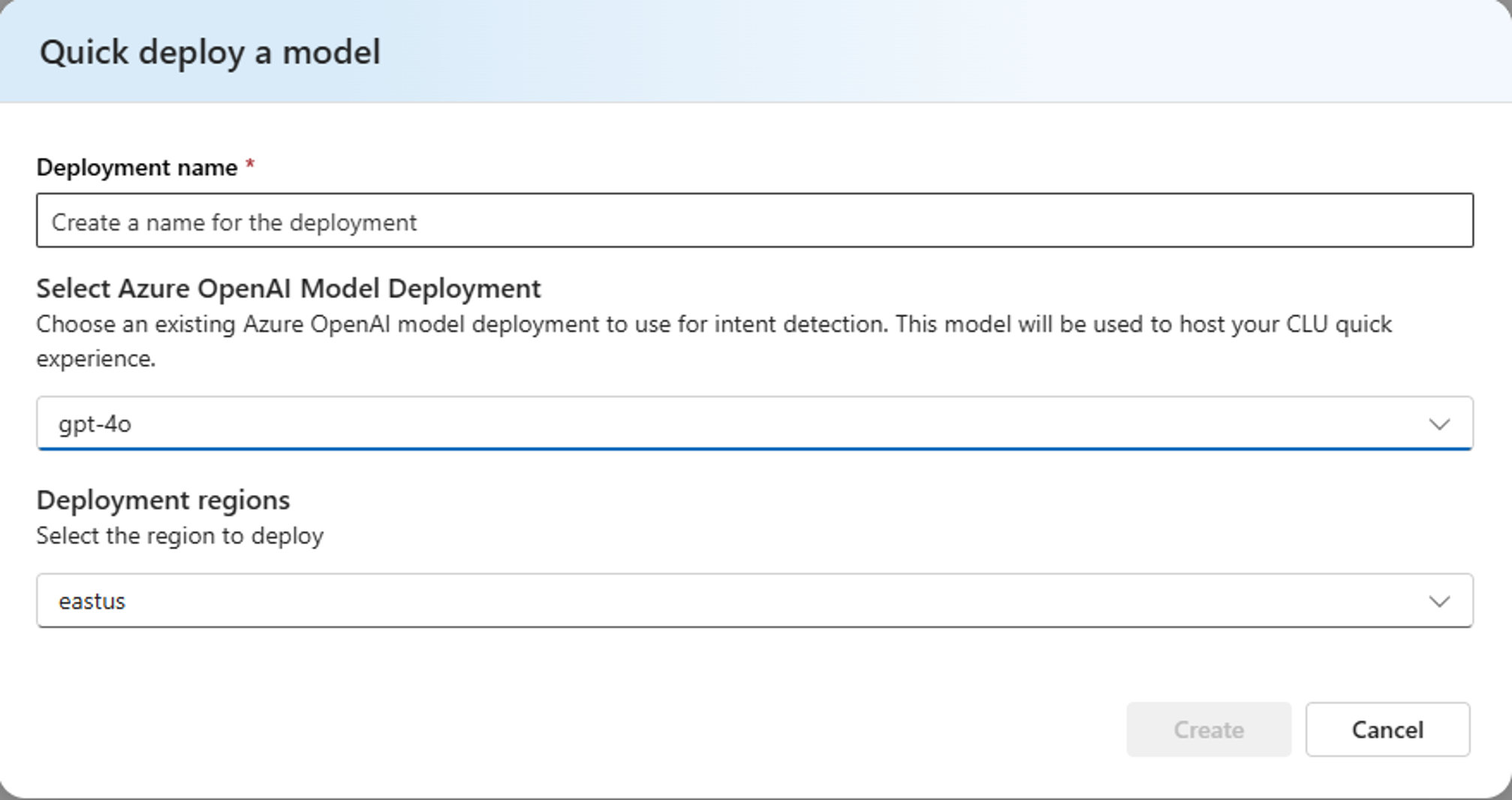

- Quick Deploy (Option #1): This new feature lets you deploy a CLU model with just your list of intents and a plain-text description of each intent. This LLM-powered configuration lets you see your agent in action without training a machine learning model. Even with this LLM-based approach, you still get the structured JSON CLU output that lets your agents and workflows understand the AI prediction. This option works best when you want to get started quickly and need to cover a broad range of topics in open-ended text.

- Here are some tips for creating intents and descriptions with the Quick Deploy option:

- Ensure your resource has the correct access. In the Azure portal, make sure your AI Foundry resource is assigned the Cognitive Services User role on the resource that contains the AOAI deployment. This assignment is required even if you use a single AI Foundry resource; in that case, assign the role to the resource itself. If you are using CLU with a Language resource, assign the Cognitive Services User role on the AOAI resource.

- Use clear and concise intent names. Choose intent names that resemble natural language expressions. Limit them to 2-3 words and format them using either camelCase (e.g. bookFlight) or underscore_separated (e.g. check_order_status) styles.

- Provide a focused description. Each intent should include a brief definition (ideally 1-2 sentences) that clearly explains its purpose. Aim for 50–100 characters to strike a balance between clarity and processing efficiency. However, longer descriptions are also supported if needed.

- Optionally, add example utterances. Include a few carefully selected example utterances for each intent to show the LLM how users might naturally express that intent. They aren’t required for the Quick Deploy option, but they can help the model predict a user’s intent more accurately.

- Customization with Machine Learning (Option #2): CLU continues to offer state-of-the-art model training for when you need a context-specific, low-latency, deterministic model that gives you more control over intent classification. Although this option takes more time because the model must be trained, the machine learning approach offers additional capabilities, such as model performance evaluation, prediction confidence scoring, on-premises container support, and world-class entity recognition. This option works best when you need more control at inference time.

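The Quick Deploy naming and description tips above are easy to check before you deploy. The following Python sketch is a hypothetical pre-deployment lint, not part of CLU or AI Foundry: it flags intent names that are neither camelCase nor underscore_separated or that run longer than three words, and descriptions outside the suggested 50–100 characters. Since longer descriptions are also supported, treat its output as warnings rather than errors.

```python
import re

# Patterns for the two naming styles suggested for Quick Deploy intents.
CAMEL_CASE = re.compile(r"^[a-z][a-z0-9]*(?:[A-Z][a-z0-9]*)*$")
SNAKE_CASE = re.compile(r"^[a-z][a-z0-9]*(?:_[a-z0-9]+)*$")


def intent_warnings(name: str, description: str) -> list:
    """Return style warnings for one intent definition (hypothetical helper)."""
    warnings = []
    if SNAKE_CASE.match(name):
        words = name.split("_")
    elif CAMEL_CASE.match(name):
        # Split "bookFlight" into ["book", "Flight"].
        words = re.findall(r"[A-Z]?[a-z0-9]+", name)
    else:
        warnings.append(f"{name!r}: use camelCase or underscore_separated style")
        words = re.findall(r"[A-Za-z0-9]+", name)
    if len(words) > 3:
        warnings.append(f"{name!r}: {len(words)} words; keep names to 2-3 words")
    # The 50-100 character range is a recommendation, not a hard limit.
    length = len(description.strip())
    if not 50 <= length <= 100:
        warnings.append(
            f"{name!r}: description is {length} characters; aim for 50-100")
    return warnings


# Hypothetical intent definitions.
INTENTS = {
    "bookFlight": "User wants to reserve a seat on a flight to a destination.",
    "check_order_status": "User asks where an order is.",
}

for intent_name, intent_description in INTENTS.items():
    for warning in intent_warnings(intent_name, intent_description):
        print(warning)
# prints: 'check_order_status': description is 28 characters; aim for 50-100
```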

With either model deployment option, you can see your prediction results in action in the AI Foundry playground. These features make the intent triage agent a game-changer for teams looking to scale intelligent routing across complex conversational flows.

Answer Questions with Precision with CQA

The exact question-answering agent is powered by Custom Question Answering (CQA), which lets you build AI systems that accurately respond to user questions by extracting answers from your own content, such as documents, websites, or knowledge bases, tailored to your specific business needs. In addition to the new authoring experience in the Azure AI Foundry portal that we announced earlier this week, today we’re announcing two enhancements to CQA that improve the prediction experience. First, CQA now offers exact match answering: when a user asks a question identical to the question in a question-answer pair, that pair’s answer is returned regardless of the score. Second, CQA now supports the same scoring available in QnA Maker, so you can choose between the CQA ranking algorithms and the familiar QnA Maker scoring logic, depending on your use case.
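To illustrate how exact match answering differs from score-based ranking, here is a minimal Python sketch over a hypothetical in-memory knowledge base. It is not CQA’s implementation: `difflib.SequenceMatcher` stands in for both the CQA ranking algorithms and QnA Maker scoring, and the case/whitespace normalization and the 0.5 threshold are assumptions chosen for the example. What it shows is the ordering: an identical stored question returns its answer immediately, regardless of score, and ranking with a threshold applies only otherwise.

```python
from difflib import SequenceMatcher
from typing import Optional

# Hypothetical knowledge base: alternate phrasings of a question + one answer.
KNOWLEDGE_BASE = [
    (["How do I reset my password?"],
     "Use the Forgot password link on the sign-in page."),
    (["What are your opening hours?", "When are you open?"],
     "We are open 9am to 5pm, Monday to Friday."),
]


def normalize(text: str) -> str:
    """Case-fold and collapse whitespace (an assumed normalization)."""
    return " ".join(text.casefold().split())


def answer(question: str, kb=KNOWLEDGE_BASE,
           threshold: float = 0.5) -> tuple:
    """Return (answer or None, score, mode) for a user question."""
    query = normalize(question)
    # 1. Exact match: an identical stored question wins regardless of score.
    for questions, reply in kb:
        if any(normalize(q) == query for q in questions):
            return reply, 1.0, "exact"
    # 2. Otherwise, score every stored question and keep the best one.
    best_reply: Optional[str] = None
    best_score = 0.0
    for questions, reply in kb:
        for q in questions:
            score = SequenceMatcher(None, query, normalize(q)).ratio()
            if score > best_score:
                best_reply, best_score = reply, score
    # 3. Return a scored answer only if it clears the threshold.
    if best_score >= threshold:
        return best_reply, best_score, "scored"
    return None, best_score, "no_answer"


print(answer("how do i reset my   PASSWORD?")[2])  # exact
print(answer("How do I reset my password")[2])    # scored
```

Checking for an exact match before ranking means a question an author wrote verbatim can never be outscored or filtered out by the threshold.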

These enhancements empower developers to build agents that not only understand but also respond with confidence and clarity. For more information, check out our Agents blog post, the Agent Template GitHub repo for the intent triage agent and exact question-answering agent, and the Microsoft Learn documentation.
